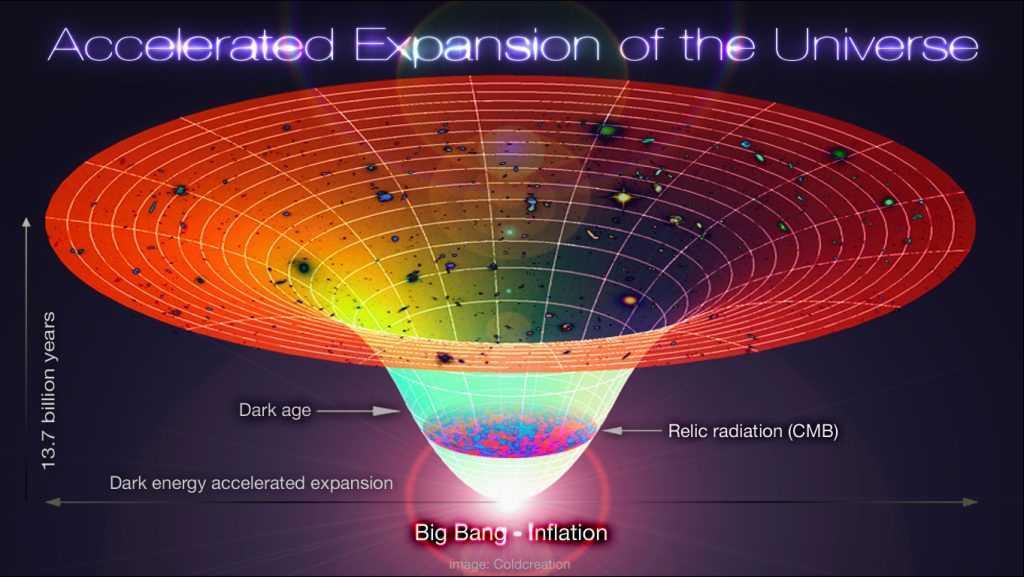

These are interesting times for cosmologists. On the one hand, general relativity and the cosmological principle (the assumption that the universe is homogeneous and isotropic on sufficiently large scales) have led to an incredibly powerful cosmological model, Lambda Cold Dark Matter (LCDM). Its predictions have been confirmed to extremely high precision by the latest generation of surveys of the cosmic microwave background (the Planck satellite, the South Pole Telescope, and the Atacama Cosmology Telescope) and of large-scale structure (the Sloan Digital Sky Survey). On the other hand, immense questions remain, in particular: what is the nature of the mysterious dark matter and dark energy that pervade the universe? These questions have left cosmologists wondering whether there could be a massive hole in their seemingly perfect model.

I think most cosmologists would agree that, even though the LCDM model is remarkably good at explaining and predicting a wide variety of observations, something deep is missing, at least in our fundamental understanding of these components (i.e., the LCDM model is currently only a parametric description of nature). They would also agree that one expects* something to break down at some point, as datasets grow larger and ever more precise. What is particularly exciting today is that we may be on the verge of (yet another) breakthrough in cosmology: our seemingly perfect cosmological model is failing to describe recent measurements of two fundamental quantities of the universe. First and foremost, the expansion rate of the universe, known as “the Hubble constant”, has been measured to be significantly larger than predicted. Second, the amplitude of fluctuations (quantifying the “clumpiness”) in the local universe is much smaller than predicted. These “cosmic tensions” may hold the first hints of the true nature of dark matter and dark energy.

There are some important caveats about these tensions before claiming that LCDM has failed. First, one may ask whether the “statistical significance” of the discrepancies justifies calling these measurements in tension with LCDM. In other words, could this just be a chance fluctuation? Any measurement can, through bad luck, land somewhat off the true value of the quantity being measured. Interestingly, this explanation is becoming increasingly unlikely. In fact, it is now basically impossible to attribute the “Hubble Tension” to a statistical fluke, as the chance of a random fluctuation is now below one in a million. The “sigma8 tension” (as cosmologists call the “clumpiness of the universe” tension), on the other hand, is still at a level of (roughly) a one-in-a-hundred chance of a random fluctuation and therefore, in all fairness, could still be due to an unlucky measurement. Second, there could still be intrinsic measurement errors that bias the current estimates and make it look like there is an issue with LCDM, when in reality one should simply correct the instrument or the analysis. This possibility is being investigated very actively by cosmologists, and so far there is no consensus on whether a systematic error could explain away these measurements. In fact, it seems that one would need to invoke multiple systematic errors acting in conjunction to fool cosmologists, giving hope that these discrepancies are truly due to a failure of our model.
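The probabilities quoted above are often expressed as a number of “sigmas”. As a rough illustration, and assuming Gaussian errors with a two-sided test (the usual convention, but an assumption on my part), the two can be converted using only Python's standard library. The function names `two_sided_p` and `sigma_from_p` are mine, purely for illustration:

```python
import math


def two_sided_p(n_sigma: float) -> float:
    """Chance that a Gaussian variable lands at least n_sigma from its mean (either side)."""
    return math.erfc(n_sigma / math.sqrt(2.0))


def sigma_from_p(p: float) -> float:
    """Invert two_sided_p by bisection (two_sided_p decreases monotonically with n_sigma)."""
    lo, hi = 0.0, 10.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if two_sided_p(mid) > p:
            lo = mid  # still too probable: the tension must be more significant
        else:
            hi = mid
    return 0.5 * (lo + hi)


for n in (2.0, 2.5, 3.0, 4.0, 5.0):
    p = two_sided_p(n)
    print(f"{n:.1f} sigma -> p = {p:.2e} (about 1 in {1 / p:,.0f})")

for p in (1e-2, 1e-6):
    print(f"p = {p:g} -> {sigma_from_p(p):.2f} sigma")
```

Under these assumptions, a one-in-a-hundred chance corresponds to roughly 2.6σ, and “below one in a million” to roughly 4.9σ or more.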

With that in mind, one may ask whether there are models that could explain these data, and what they would mean for our understanding of dark matter and dark energy. The answer is: it’s complicated. Just as there is no consensus on whether a measurement or modeling error could be at play, there is also no consensus yet on which models (if any) can explain these measurements. In fact, one must admit that it is very hard to explain the value of the Hubble constant precisely because we have so many other high-precision datasets that seem to fit our LCDM model so well. When one turns one knob to match the Hubble constant measurement, another dataset typically becomes discrepant with the resulting model, leaving poor cosmologists in a state of dissatisfaction (and I have tried!). I will leave the discussion of models attempting to resolve the Hubble Tension for another time, or another author, and focus for now on the topic of the seminar I recently gave as part of the CosmoVerse series, which deals instead with a model aimed at resolving the “sigma8 tension”.

On paper, unlike the Hubble Tension, the sigma8 tension is fairly “easy” to resolve. By this I mean that, while we have a large number of high-accuracy datasets, a window remains open in which there is a lot of freedom to play with the model, and this freedom can be used (at least in theory) to explain why our local universe appears less clumpy than the LCDM model predicts. This is because our best measurements, those which agree well with the LCDM model, mostly span large scales (here, scales or distances greater than ~30 million light-years), while measurements of the “clumpiness” of the local universe are mostly sensitive to precisely the range of smaller scales, down to about 1 million light-years or so. In practice, however, this simplicity has its limits. First, making predictions on very small scales is complicated: gravity becomes “non-linear”, coupling different scales together, and effects beyond gravity due to “baryons” (ordinary matter), such as feedback from supernovae and active galactic nuclei, may become important. Second, the small-scale window is not entirely open. Other important measurements on those scales may constrain your favourite model and should be checked (I think in particular of the “Lyman-alpha forest” and the Milky Way “satellite galaxies”), although the rule of thumb is that these are less constraining, or at least less robust, than the usual large-scale ones. The models proposed so far typically invoke new properties of dark matter and/or dark energy, or a modification of gravity. One of my favourite explanations is that dark matter may be unstable over cosmological time-scales and decay into a lighter particle; the decay products acquire some velocity, which allows them to escape gravitational potentials and reduces the local “clumpiness”.

Another possibility is that, in the past, dark matter interacted with some light particles, much like standard-model baryons (electrons and protons) interacted with photons before recombination. This interaction damps structure on small scales but leaves the very large scales unaffected, leaving us today with less structure in the local universe. All these models are being actively studied by cosmologists.

Filamentary structure of galaxies and galaxy clusters in the universe. If dark matter is hot (left panel), there is less structure than if it is warm (middle panel) or cold (right panel).

Credit: ITC @ University of Zurich

However, I want to focus on a third interesting possibility: what if dark matter and dark energy could “talk” to each other? This sort of scenario has long been considered as a way to explain why the measured density of dark energy is so similar to that of dark matter precisely in the current epoch. Indeed, given that we know very little about dark energy, and that its impact can be dramatic for the universe (and for the existence of life!), it is quite natural to ask whether a dynamical mechanism, rather than mere chance or a “cosmic coincidence” (as it is called nowadays), explains its current value. As it turns out, there are strong constraints on models in which the energy densities of dark matter and dark energy vary significantly over time, differently from the behaviour expected in LCDM, because, as said before, our observations (except for these cosmic tensions) are in very good agreement with LCDM. It is in fact debated whether the data permit a transfer of energy from dark matter to dark energy as a way to explain the Hubble Tension. Current data disfavour it, but it remains an interesting possibility.

The constraints are much weaker, however, when it comes to allowing for a momentum exchange (as opposed to an energy exchange) between the two components. The reason is that dark matter is cold, so a momentum exchange does not significantly affect its energy density. It does, however, affect how the small perturbations at the origin of the structures we see today grow, and such interactions can be used to reduce the amplitude of fluctuations in the local universe, thereby helping to explain the “sigma8 tension”. This is an interesting possibility, and in fact several authors have shown that it may work! The momentum exchange can pass the stringent constraints from the cosmic microwave background while seeming to accommodate the observations made in the local universe.
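To give a feel for how a momentum exchange can slow the growth of structure without touching the background energy densities, here is a toy sketch: the linear growth equation for matter perturbations in a flat LCDM background, with an extra friction term added by hand. The drag strength `xi`, the choice to make it proportional to the dark-energy fraction, the density parameter, and treating all matter as a single fluid are illustrative assumptions, not the interacting model discussed in my talk; `rhs` and `grow` are hypothetical helper names:

```python
import math

OMEGA_M = 0.31            # assumed present-day matter density parameter (illustrative)
OMEGA_DE = 1.0 - OMEGA_M  # flat universe, dark energy as a cosmological constant (assumption)


def omega_m_of_a(a: float) -> float:
    """Matter fraction of the total density at scale factor a (radiation neglected)."""
    rho_m = OMEGA_M * a ** -3
    return rho_m / (rho_m + OMEGA_DE)


def rhs(n: float, y: tuple, xi: float) -> tuple:
    """Growth equation with n = ln a and y = (delta, d delta / d ln a):

    delta'' + (2 + dlnH/dlna + Gamma/H) delta' = 1.5 * Omega_m(a) * delta,
    where Gamma/H = xi * Omega_DE(a) is a hypothetical drag that switches on as dark energy grows.
    """
    om = omega_m_of_a(math.exp(n))
    dlnh_dlna = -1.5 * om   # exact for flat matter + cosmological constant
    drag = xi * (1.0 - om)  # hypothetical momentum-exchange friction
    delta, ddelta = y
    return ddelta, -(2.0 + dlnh_dlna + drag) * ddelta + 1.5 * om * delta


def grow(xi: float, a_start: float = 1e-3, steps: int = 4000) -> float:
    """Integrate from deep matter domination to a = 1 with fixed-step RK4; return delta(a=1)."""
    n, h = math.log(a_start), -math.log(a_start) / steps
    y = (a_start, a_start)  # growing mode during matter domination: delta proportional to a
    for _ in range(steps):
        k1 = rhs(n, y, xi)
        k2 = rhs(n + h / 2, (y[0] + h / 2 * k1[0], y[1] + h / 2 * k1[1]), xi)
        k3 = rhs(n + h / 2, (y[0] + h / 2 * k2[0], y[1] + h / 2 * k2[1]), xi)
        k4 = rhs(n + h, (y[0] + h * k3[0], y[1] + h * k3[1]), xi)
        y = (
            y[0] + h / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]),
            y[1] + h / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]),
        )
        n += h
    return y[0]


d_lcdm = grow(0.0)
for xi in (0.0, 0.5, 1.0, 2.0):
    print(f"xi = {xi:.1f}: delta_today / delta_today(LCDM) = {grow(xi) / d_lcdm:.3f}")
```

In linear theory, sigma8 scales with the amplitude of the perturbations today, so the printed ratio is a rough proxy for how much such a drag would lower sigma8 relative to LCDM in this toy setup. Because the drag only acts on the velocity of the perturbations, the background expansion is left exactly as in LCDM.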

Moreover, as I argued during my talk, there is an intriguing “coincidence” that I believe deserves particular attention, and which is reminiscent of the first “cosmic coincidence” mentioned above: the data confine the interaction to be significant precisely at the time when dark energy starts dominating the energy density of the universe (i.e., precisely when the energy densities of dark matter and dark energy become of the same order). Had it been much stronger, the amplitude of fluctuations would be so low that there would be no structure in the local universe. Had it been much weaker, we would not see its impact on the data. This second “coincidence” suggests that the sigma8 tension may be connected to the much older “coincidence problem”, providing us with new hints for building models of dark matter and dark energy. This hint is just a first step: testing it requires much more data and more accurate theoretical predictions, and it may very well be invalidated by better data. However, if it is confirmed, it could have tremendous implications for our understanding of the dark universe and would pave the way toward a theory that unifies dark matter and dark energy. We are still only at the dawn of building and testing such a theory. But one thing is certain: whether or not this specific model is correct, if the cosmic tensions that we see today withstand the test of continuously improving data (which will arrive within the next decade from the plethora of surveys that have just started, including DESI, JWST, Euclid, and the Vera Rubin Observatory, among many others), our beautiful LCDM model is in big trouble. And yet, from the likely failure of a model, we will be in a position to make tremendous progress in our understanding of the mysteries of our big and dark universe. Interesting times indeed.
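To put a date on the “coincidence” epoch, here is a back-of-the-envelope sketch, assuming dark energy is a cosmological constant and using Planck-like density parameters (illustrative values I picked, not numbers from this post). Dark matter density dilutes as (1+z)^3 while a cosmological constant does not, so the two are equal when (1+z)^3 = Ω_DE/Ω_DM. The function name `z_equality` is hypothetical:

```python
OMEGA_DM = 0.26  # assumed present-day cold dark matter density parameter (illustrative)
OMEGA_M = 0.31   # assumed present-day total matter (dark matter + baryons) density parameter
OMEGA_DE = 0.69  # assumed present-day dark-energy density parameter (cosmological constant)


def z_equality(omega_matter: float, omega_de: float) -> float:
    """Redshift at which a pressureless matter component and a cosmological constant
    have equal density: omega_matter * (1 + z)**3 == omega_de."""
    return (omega_de / omega_matter) ** (1.0 / 3.0) - 1.0


print(f"dark matter = dark energy at z ~ {z_equality(OMEGA_DM, OMEGA_DE):.2f}")
print(f"all matter  = dark energy at z ~ {z_equality(OMEGA_M, OMEGA_DE):.2f}")
```

With these values, the two densities cross at a redshift of roughly 0.3 to 0.4, i.e. within the last few billion years of cosmic history, which is the epoch in which the data seem to want the interaction to matter.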

*To be fair, it is possible that dark energy is just a cosmological constant, and that dark matter, even though its fundamental nature is unknown, behaves at the cosmological level *exactly* like cold dark matter, down to the smallest scales, interacting only gravitationally. This “nightmare” scenario, although possible, is not fun. Let’s hope the universe is fun.